Abstract

In this post I will investigate the Leibnizian idea of a Characteristica Universalis by comparing two diverging paradigms of computation which, as I will argue, have emerged since the end of the 19th century. While algebraists like Augustus De Morgan, George Boole, Charles Sanders Peirce and Richard Dedekind were working towards a symbolic-algebraic constitution for a theory of quantification and formalization, logicists like Georg Cantor, Gottlob Frege and Bertrand Russell worked on an arithmetical paradigm that has become the main reference for most theories of computation today. The point of divergence, I will argue, revolves around different philosophical conceptions of „number“, especially those that distinguish and relate natural, rational and complex numbers. We can literally speak of a point of divergence here, because the two lines I am pointing out manifest themselves in clearly contoured fashion in the writings of Frege and Dedekind on the foundations of arithmetic. These two main protagonists recognized very early on the problem of finding a sound conceptual foundation for the then emerging, technology-driven power of applied calculation; yet they pursued mutually ‚inverse‘ conceptions of how to map the set-theoretical scheme of cardinals and ordinals onto a well-founded concept of natural numbers. Frege placed the natural numbers on the cardinality side of quantities, while Dedekind placed them, as the conceptually sound definition, on the ordinality side. Such a perspective also sheds new light on the idea that seems to have driven Leibniz‘s notion of a Characteristica Universalis, which according to him was supposed to replace older philosophical notions of universal forms, or their approximable representations.

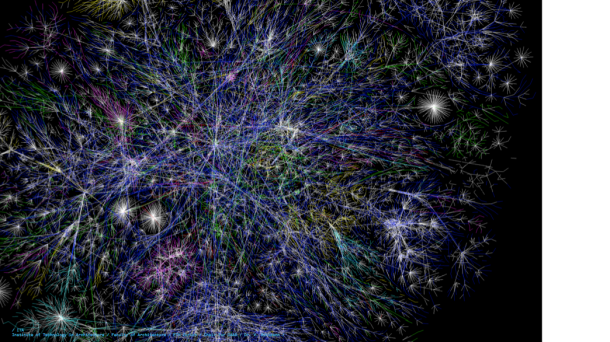

This may well sound like a highly specialized sophistication in historical reconstruction, but what makes such considerations both relevant and interesting today is our contemporary computing power based on algorithms and parameters. Digital code is symbolic and algebraic; we are not, strictly speaking, computing numbers. We are computing symbols. While the main obsession and challenge for a concept of numbers has always been that of the Every, of the countable, the main obsession and challenge of algebraic symbols has always been that of the Any, of that which can be expressed in a calculable, demonstrable, constructible way. Jules Vuillemin, in his seminal book La Philosophie de l‘Algèbre (1962), has provided an outlook on how we could today, on the empirical grounds of digital computation, work towards an ontology of general forms in combination with the necessity of doctrines with respect to views on the world, in a manner that shares many aspirations with Leibniz and that has not yet received much attention in 20th and 21st century philosophy.

* This post relies much on a paper delivered at the 1st International Congress for New Researchers in History of Thought: „Intellectual Creations regarding the Book of Nature“, held at the Faculty of Philosophy of the Universidad Complutense de Madrid, March 6th to 9th 2012.

Mathesis, Geometria Speciosa, La Cosa, Topics – setting the stage for a theatricality of the pre-specific

Car si nous l’avions telle que je la conçois, nous pourrions raisonner en metaphysique et en morale au peu pres comme en Geometrie et en Analyse. (Leibniz 1690)

If we had it [a characteristica universalis], we should be able to reason in metaphysics and morals in much the same way as in geometry and analysis (Russell 1900)

The interest in a mathesis, a universal method, together with an interest in an architectonics of thinking, might appear today to be of historic interest only, if of any interest at all. Instead of thinking about an architectonic structure of our reasoning and capacities of learning, it is much more common to investigate the structure(s) of knowledge and to explore our mind in a positivist fashion. You can read, as a subtext to this post, my concern about a seeming willingness to pay the price of actually unlearning thinking today – for the shallow benefit of buying the possibility to simply administer knowledge, objectively. As if knowledge had not always, and in essence, been gained from learning. Many are willing to make technology responsible for such seemingly increasing sterility and speechlessness between the human faculties, and for the associated tendency towards a radical bureaucratization of reason instead of further differentiating its architectonic disposition. I would like to convince you in the following that the most such a stance reveals, if anything at all, is a certain fatigue of thinking.

Having made explicit the position and stance from which this post is formulated, let me start by anticipating the nucleus of my basic line of argumentation: Between Leibniz‘s statement in the late 17th century, and Russell‘s translation of it in the early 20th century, these same words have come to mean radically different things. And this even though one is meant to be a mere translation of the other. The main reason for this, as I would like to argue, lies in what we hold reasoning in geometry and analysis to be like. Russell, the main propagator of Frege‘s logicist program of arithmetization, thought reasoning in geometry and analysis ought to be grounded in clearly defined classes of numbers, and that the natural numbers should play a special role thereby. Leibniz, on the other hand, had celebrated not the idea of objectively determinable number classes, but the idea of the monas as the springing, incessant source of numbers. Without going into any details yet, we can perhaps safely say that what has changed between them is the role of algebraic symbols.

But let us not foreclose things, and start instead with the important invention of symbolic objectivity. Let us ascribe this idea, that of algebraic objectivity, to Descartes as its most likely source. His great invention was to postulate an experimentally, mathematically accessible realm where ideas can be tested, if only we follow certain rules that give orientation to our thought. Initially, this great idea was precisely that: an idea taken seriously, an idea worked out into such a form that the conditions for proving what it claims are established and can be accessed by anyone. Thought that has turned objective in this manner is held to represent things as they really are. It does not, as was held before, present things to the mind as they had been experienced. The role of symbols has transformed. Symbols are no longer, ultimately, marks of affections of the soul, as Aristotle had it. They are now letters that can encode arbitrary content. As such they can not only present a bygone experience anew, mentally, but they can provide a general structure for such „presentability“ – which thereby becomes separated from its former basis in lived experience. There is, suddenly, an objectivity to symbolic encodings that allows the encoded to be referred to and represented purely generally, independent of any individual experience. And this is my main concern in opening up a gap between the two citations, Leibniz and Russell: a gap that spans more than the inevitable caesura of translation.

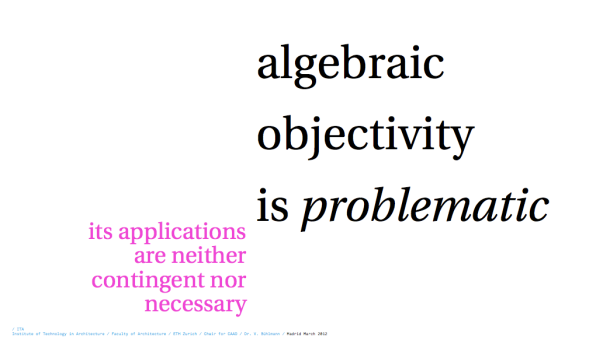

Certainly, symbolic calculation is our reality today. Yet symbols are not numbers. Symbolic calculation treats unknown and known quantities in order to determine the unknown in such a way that a problem can be solved. I follow here the position of Gilles Deleuze, who views ideas as the objective being of problems-as-problems. The metaphysical preoccupation with being-qua-being, or its modern logicist guise as set-qua-set, is hence given up, by this stance, for a novel preoccupation with the problematicity of categoricity. What this means, in somewhat easier terms, is the postulate of an ideality as the empirical grounds for an experimental science within the abstract, which aims at finding sustainable, integrable and situation-specifically optimal representations of problems. Because problems can only be solved through the possibilities their representations allow for. If we choose a simple representation for a problem, we get a range of simple solutions. The more complex a representation we affirm, the more varied the solution space we find ourselves confronted with. The difference, I would like to point out in the following, between Leibniz speaking of a characteristica universalis and Russell speaking of it, lies in the latter‘s assumption that the question of what can be calculated in principle needs to precede the decision for a certain level of complexity one is willing to engage with, while the former certainly did not assume this. For Leibniz the algebraist, the universal was not where we find the general templates for true classification. The universal for him is the springing source of numbers, the monas, which allows us to harvest the means for characterizing and cultivating the variety of being in an engaged way, neither receptive, nor fully in control. The universal empowers us for smart diplomacy, as Leibniz very well knew.
If we determine being as the unknown quantity within our investigations, if we strive to determine being algebraically, the notion of computability is a radically different one from that which is predominant today in the Church-Turing tradition. The relevancy of Leibniz‘s idea of a characteristica universalis lies in opening up the vast field of what we call non-Turing computing. For such a notion of computing as well, computable is what is objectively determinate. Yet objectivity, in such a position, is what is genuinely problematic – not in the sense that its problematicity could be solved and cleared away only to reveal its pureness as universality. Quite differently. Objectivity is problematic in the sense that the representations of it – as a specific problem – are possible in such and such many ways – each granting specific solutions – yet without an unproblematic hierarchy as to what may be the necessary solution. Ideality, as the objective being of problems, is in principle fully determinate – hence its objectivity – yet it is never actually fully determinable.

I would like to call this modality of the objective being of problems the virtual. The virtual is to be understood, as I would like to present in the following, as the peculiar modality proper to “the new continent” discovered by abstract algebra, as E.T.Bell has put it in his Development of Mathematics (1946). While calculation has been thought of as that which is capable of separating for us, uniquely and clearly, what of our reasoning may count as contingent and what may count as necessary, Algebra severely complicates things.

Hence certainly, symbolic calculation is our reality today. Yet symbols are not numbers; their objectivity is algebraic. Symbolic calculation treats unknown and known quantities in order to determine the unknown in such a way that a problem can be solved. The key issue, philosophically, for thinking about computability today concerns a choice between two mathematical theorems: which of the two should we give primacy? These two theorems are the following.

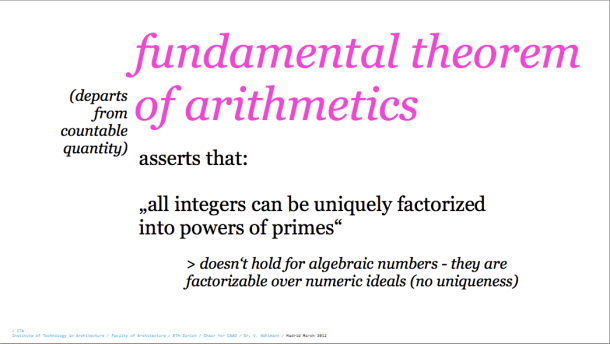

The fundamental theorem of arithmetic states that every integer greater than 1 can be factorized unambiguously, up to the order of the factors, into powers of primes. It grants solutions which are, arithmetically, necessary solutions to a problem – yet it can only treat problems which can be formalized into the number space of rational integers (even if they be allowed as infinitesimal approximations by reals). This reduces the number of masterable unknowns in polynomial equations to four, as this is the highest order for which equations can in general be solved by radicals and by factorization into rational integers (without involving the domain of complex numbers).
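To make the claim of unambiguous factorization concrete, here is a minimal sketch in Python. It uses plain trial division, which is only one of many possible procedures and is chosen here for readability; the function name `prime_factorization` is an illustrative assumption, not something taken from the sources discussed in this post.

```python
def prime_factorization(n):
    """Return {prime: exponent} for an integer n > 1, using trial division."""
    if n < 2:
        raise ValueError("n must be an integer greater than 1")
    factors = {}
    divisor = 2
    # Any composite n has a prime factor no larger than its square root.
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors[divisor] = factors.get(divisor, 0) + 1
            n //= divisor
        divisor += 1
    # Whatever remains above 1 is itself a prime factor.
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


print(prime_factorization(360))  # {2: 3, 3: 2, 5: 1}, i.e. 360 = 2^3 * 3^2 * 5
```

Whatever procedure one chooses, the theorem guarantees that the resulting table of primes and exponents is the same; this is the sense in which the solution is arithmetically necessary.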

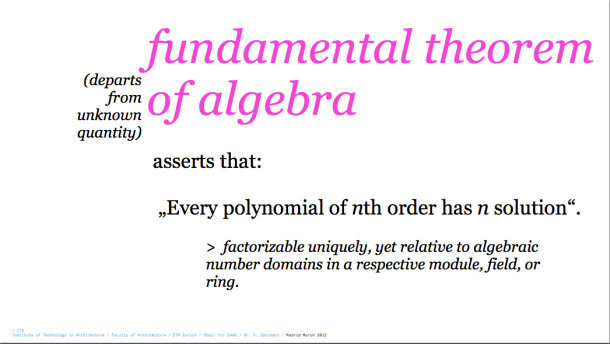

The fundamental theorem of algebra, on the other hand, states that any problem can be solved. In its formulation by Gauss, the theorem holds that a polynomial of degree n can be solved, within the complex number space, in such a way that it resolves into n solutions (counted with multiplicity). According to this theorem, the order of polynomial equations is virtually unlimited, which means that an unlimited number of unknowns can be taken into account – in principle. In practice, this is where pragmatic issues of computing power play a role, yet these may not be regarded as a problem of principle.
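A small numerical sketch may help to see what this theorem grants. The quintic x^5 - x - 1 is a standard example of an equation that cannot be solved by radicals, yet its five complex solutions can be approximated to any desired precision. The code below uses the Durand-Kerner iteration, which is my choice for illustration and not a method taken from Gauss; the function names, the starting guesses and the iteration count are assumptions of this sketch.

```python
def evaluate(coefficients, x):
    """Evaluate a polynomial (highest-degree coefficient first) by Horner's rule."""
    result = 0
    for c in coefficients:
        result = result * x + c
    return result


def all_roots(coefficients, iterations=500):
    """Approximate all n complex roots of a degree-n polynomial (Durand-Kerner)."""
    leading = coefficients[0]
    monic = [c / leading for c in coefficients]
    n = len(monic) - 1
    # Conventional starting guesses: distinct points spiralling in the complex plane.
    roots = [(0.4 + 0.9j) ** k for k in range(n)]
    for _ in range(iterations):
        updated = []
        for i, r in enumerate(roots):
            denominator = 1
            for j, other in enumerate(roots):
                if j != i:
                    denominator *= r - other
            updated.append(r - evaluate(monic, r) / denominator)
        roots = updated
    return roots


QUINTIC = [1, 0, 0, 0, -1, -1]  # x^5 - x - 1
for root in all_roots(QUINTIC):
    print(root, abs(evaluate(QUINTIC, root)))
```

The printed residuals show how close each approximation comes to satisfying the equation. The solutions are found as points in the complex plane, not as closed expressions in radicals.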

We have a choice between which of those two principles ought to be given primacy over the other. Algebraic number theory has provided mathematics with a notion of Ideal Numbers which allows that the theorem of unique factorisation can be recovered also for conceptual domains of algebraic integers – even if only in a relative sense, relative to a field, ring or module of algebraic numbers. Arithmetic calculability turned out to be relative to the respective modules and rings of Ideal Numbers.

This move in 19th century mathematics, initiated by Gauss himself, and pursued by people like Kummer, Dirichlet, Dedekind and Kronecker, among others, led almost immediately to striking progress in the mastering of electricity, and with it in developing a physical model of the atom, the evolution of chemistry, eventually quantum physics, and, most importantly, also information science. Algebraic numbers are basic for almost everything that characterizes our contemporary cultural lives – progress in medicine, individual mobility, global economics and democratized education structures, to name only a few of those vectors.

Yet the precise nature of these new mathematical objects, as well as the basis for their introduction and the range of applicability of the technique, was left unclear in the 19th century, and still is unclear today. It is what drove the interest in the foundational questions of mathematics throughout the late 19th and early 20th century, and it is what drives the interest in understanding the potentials and the limits of calculability and computability today.

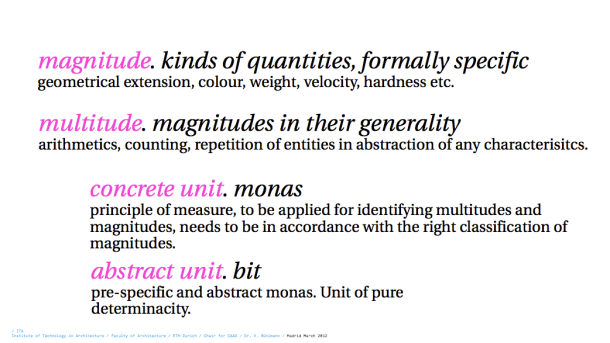

But let us at least touch on some of the issues at stake. A quantity was always thought to have a double make-up, ever since Pythagoras, Plato, Aristotle, Euclid and Eudoxus started to explicitly reflect on quantities: magnitude is called what can be measured, and it typically comes in different kinds which cannot easily – that is, only by analogy – be put in relation with each other; multitude, on the other hand, is the property of being enumerable, countable, and it is completely independent of any question of kind or nature. While quantities as magnitudes have proportion, quantities as multitudes have ratios. Or to put it differently, while one aspect of quantities asks how much, and hence looks at specific grounding and integration, the other aspect of any quantity asks how many, and looks at generalization. As long as people did not calculate within the rational number space, the ratios of the multitudes were unquestionably thought to label the proportions of the magnitudes. The order behind the proportionality was thought to be divine – it was thought to be making up the book of nature (Galileo?). As long as proportions and magnitudes are the grounding level to refer to, this book is thought to be written in language and geometry. With the rise of higher algebra, this very relation between magnitudes and multitudes, when treating quantities, becomes obscured. It would certainly not be too dramatic a thing to say that what characterizes modern science most is the release of a whole bunch of properties and characteristics on the level of magnitudes that were literally invisible before they could be calculated.
Among the most important of these new releases in knowledge by the modern rationalization of the proportional order of things are, in the political realm, advances in map-making, cartography, and the introduction of measuring standards more generally, in the economic realm the calculation with interests and capital, in the technical realm thermodynamics and the cleaning of fossil energy resources. The order of things, on the level of the magnitudes and proportionality, could hence be inferred and realized from empirical experiments, and no longer needed to be deduced categorically, dogmatically.

Science has become political, put in the service of the emerging new political world order of the nation states – a statement which can perhaps best be illustrated by the proclamation of the French Academy of Sciences in 1775 that the classical problems of mathematics should no longer be credited within institutional science:

„The Academy took this year the decision to never again consider a solution for the problems of doubling the cube, trisection of an angle, squaring the circle and of a machine of claimed perpetual movement. Such sort of researches has the downfall that it is costly, it ruins more than one family, and often, specialists in mechanics, who could have rendered great services, and used for this purpose their fortune, their time and their genius.“ [1]

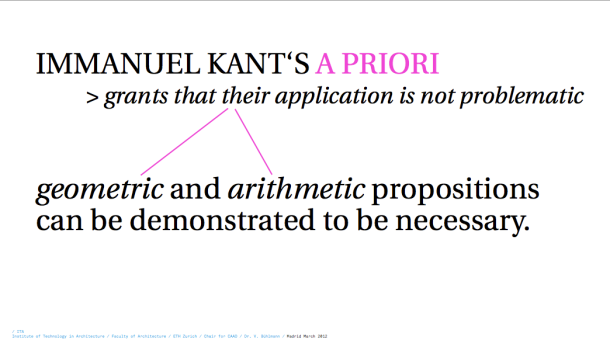

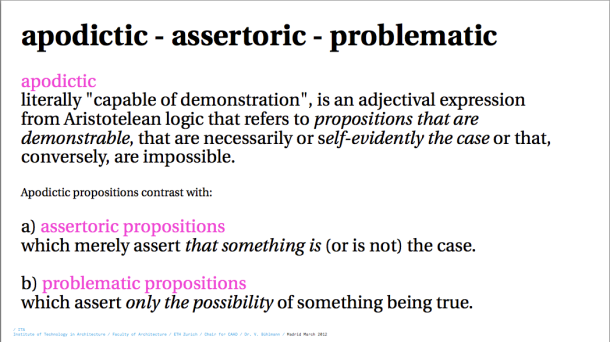

Quite obviously, such issues have been raised anew throughout the 19th century. The issues previously contained within metaphysical questions in mathematics have started to surface in a different guise: the question now came to be on which grounds to distinguish reasonable research from non-reasonable research. Shall we seek abstract, proof-theoretic foundations, or restrict ourselves to mechanically testable foundations? If the former, shall we assume for abstract reasoning a synthetic, explanatory role, as Kant had suggested, and if yes, on what grounds?

Kant‘s reliance on intuition had clearly become problematic, given the new abstract objects involved in the mathematics at issue. Or shall abstract reasoning be allowed an ampliative role? If yes, how can we then call it analytic in any non-engendering sense, and how can we still hold on to thinking that analytical thought leads us to what we have to assume as necessary rather than contingent? Or shall we, after all, decide upon an intuitionistic, constructivist point of view which grounds analytical inventions, if they are to be credited acceptance out of purely pragmatic considerations, on objectively reproducible mechanical algorithms and computation? If this seems like a reasonable stance, then consider this. How do we deal with the breeding of solutions by genetic algorithms, which on the statistical basis of population dynamics are capable of calculating solutions which are not, strictly speaking, reproducible?

*cited from: Ludger Hovestadt, Beyond the Grid, Information Technology in Architecture (Birkhäuser 2009)

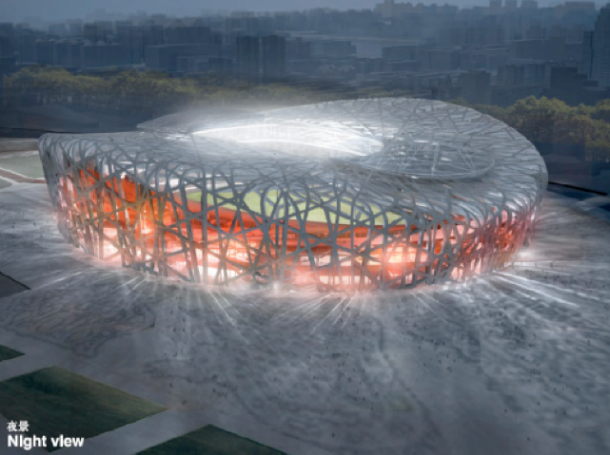

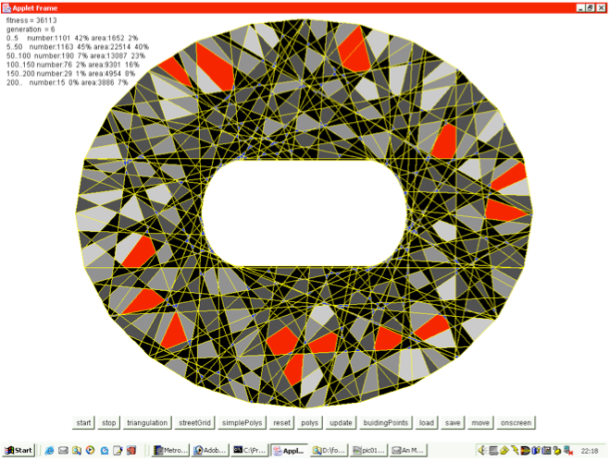

Let me detour a little and tell you of the relevance of these questions today from an applied perspective. What you see on this slide is the rendering by Herzog & de Meuron for their Olympic stadium in Beijing, with which they won the international competition. The problem was that this image is a rendering, and turned out not to be translatable by analytic means into a buildable structure. Whenever the gaps were right on the front side of the structure, new gaps appeared on the structure‘s back side. It is a classical topological problem for construction which arises if things are networked. What our team then did was to specify the solution needed and to write a program that bred the specified solution algebraically by scanning the problem space over many iterations, while eliminating, in an approximative procedure, all those solutions that did not match the desired outcome. In the end, after a few days of computation time, there was a solution which could be analytically tested to withstand all physical and constructibility-related issues. Yet this solution cannot be reproduced. If the algorithms are run a second time, the concrete model in the end will not be identical to the first one. But it will also withstand all the relevant tests.
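The logic of this procedure can be imitated in a toy sketch. It is not the program used for the stadium; the specification (eight parameters between 0 and 20 that must sum to 80), the population size and all names are hypothetical stand-ins, chosen only to show how independent runs of the same breeding procedure can arrive at different candidates that all pass the same test.

```python
import random

TARGET_SUM = 80    # hypothetical specification the candidate must meet
GENES = 8          # hypothetical number of free parameters
LOW, HIGH = 0, 20  # allowed range for each parameter


def error(candidate):
    """Distance from the specification; 0 means the candidate passes the test."""
    return abs(sum(candidate) - TARGET_SUM)


def breed(rng, population_size=30, max_generations=10_000):
    """Evolve candidates until one meets the specification."""
    population = [[rng.randint(LOW, HIGH) for _ in range(GENES)]
                  for _ in range(population_size)]
    for _ in range(max_generations):
        population.sort(key=error)
        if error(population[0]) == 0:
            return population[0]
        survivors = population[: population_size // 2]  # eliminate the worse half
        children = []
        while len(survivors) + len(children) < population_size:
            mother, father = rng.sample(survivors, 2)
            cut = rng.randrange(1, GENES)
            child = mother[:cut] + father[cut:]          # crossover
            i = rng.randrange(GENES)                     # mutate one parameter
            child[i] = min(HIGH, max(LOW, child[i] + rng.choice((-1, 1))))
            children.append(child)
        population = survivors + children
    raise RuntimeError("no passing candidate found")


# Two independent runs, imitated here by two different seeds.
first_run = breed(random.Random(1))
second_run = breed(random.Random(2))
print(first_run, error(first_run))
print(second_run, error(second_run))
```

With an unseeded `random.Random()` every run starts from a different random population, so the concrete result is in general not repeatable, while the test it has to pass stays the same.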

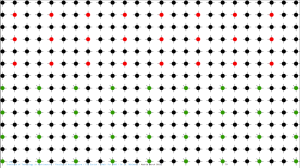

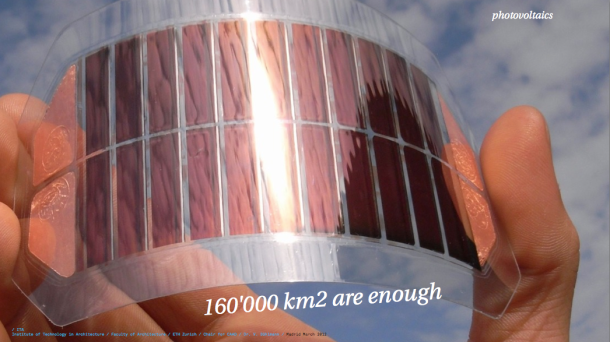

Why do I call it the algebraic book of nature? Solar cells are produced by changing the material structure of their substrate in such a way that it garners the beams of the sun and turns their light into electricity. Very literally, the substrate material is manipulated by injecting boron and phosphorus atoms into its lattice, a procedure which is called doping. The production of such cells strikingly resembles printing procedures as we know them from dealing with text on paper. With the great difference, of course, that these print-products do not represent anything; they are functionally operative. What you see here is a solar printer that produces PV cells by the meter, just as all the electronics we use for computers today are produced.

„Analytical doctrine or algebra – called ‚cosa‘ in Italy – is the art of finding the unknown magnitude, by taking it as if it were known, and finding the equality between this and the other given magnitudes“ [2]

Marco Aurel, a German-born mathematician working in Spain, put forward a similar idea in his Libro primero de Arithmetica Algebratica (1552):

„I say that, by that rule, to ask a question you have to imagine that such a question has already been asked, and answered, and that you now want to prove it.“

The challenge Algebra poses to thought, hence, consists in inventing the conditions and assumptions necessary to establish the provability of what we wish to analyze. Let us cite another voice of how the potential of symbolic algebra was received throughout the 17th century:

„One may distinguish between vulgar and specious [Viète‘s] algebra. Vulgar or numerical algebra is that which is practiced by numbers. Specious algebra is that which exerts its logic by ‚species‘ or by the forms of the things designated by the letters of the alphabet. Specious algebra is not limited by any kind of problem, and is no less useful for inventing all kinds of theorems than it is for finding solutions to and proofs of problems“ [3]

It belongs to the nature of such foundational questions that the scope they belong to, and which they unfold, strikes us as incommensurable. Whether we consider numbers to be „free creations of the mind“, as Richard Dedekind or Charles Sanders Peirce suggested, or whether we view them as having a being independent of our intellect, as Gottlob Frege or Leopold Kronecker held, the implications of both stances are impossible to survey inductively. In 1946 Eric Temple Bell wrote one of the finest commentaries on the problematics of science-turned-political that I have yet come across:

„It will do the practical man little good to say that only a metaphysician would ask such questions. The historical fact is that numerous impractical men not only asked these questions but struggled for centuries to answer them, and their successes and failures are responsible for much by which the practical man regulates his life in spite of his impatience with all metaphysics.“ [4]

This is what makes the Leibnizian idea such an intriguing idea today. Unlike people such as Raymundus Lullus or Petrus Ramus before him, Leibniz did not seek a Topica Universalis, a mechanical device to help us, individually, determine the genuine concepts immediate to the language of our thought. Leibniz seems to have grasped the idea that even if we can master, algebraically-mechanically, the Universal in Number, this does not relieve us from the complexities of learning. The universal language he envisaged, hence, was not given in the concreteness of concepts or words, but in characters. It would be trivial to state, on a technical level only, that digital code today is such a characteristica universalis. True, we can encode anything into this universal format today – not only numerical formulas but also magnitudes (Analog/Digital Conversion). Yet this does not mean that what can be computed is, essentially, unproblematic. It is much more as if we find ourselves within a grand new scenery, a global and cross-cultural stage of abstract identities – a theatricality of the pre-specific – for whom the roles as societal and public personas have yet to be invented. If learning to understand an anthropocentric position, as political subjects, was – as many people claim – an invention of modernity, then surely learning to understand an anthropogendered position as symbolic identities is the challenge of the coming decades.

*the slide gives a translation of the so-called Pappus Problem (Pappus of Alexandria, c. 290–350 AD)

I would like to finish with a statement by Gilles Deleuze from Difference and Repetition (1968):

„In this sense there is a mathesis universalis corresponding to the universality of the dialectic. If Ideas are the differentials of thought, there is a differential calculus corresponding to each Idea, an alphabet of what it means to think. Differential calculus is not the unimaginative calculus of the utilitarian, the crude arithmetic calculus which subordinates thought to other things or to other ends, but the algebra of pure thought, the superior irony of problems themselves – the only calculus ‘beyond good and evil’. This entire adventurous character of Ideas remains to be described.“

————————————————————————

[1] American Mathematical Society, The Millennium Prize Problems, published with the Clay Mathematics Institute, Cambridge Massachusetts, 2006.

[2] „La doctrine analytique, ou l‘Algèbre est l‘art de trouver la grandeur incognue, en la prenant comme si elle estoit cognue & trouvant l‘égalité entre icelle & les grandeurs données.“ „Doctrina analytica quae Algebra & Italico vocabulo cosa dicitur, est ars qua assumpta quaesita magnitudine tanquam nota, & constituta inter eam aliasque magnitudines datas aequalitate, invenitur ipsa magnitudo de qua quaeritur“ [Hérigone, in his first chapter of Algebra 1634, II, 1].

[3] Hérigone, in his first chapter of Algebra 1634, II, 1.

[4] Eric Temple Bell, The Magic of Numbers, p. 22.
